What is a Gaussian Mixture Model (GMM)?

Short Answer

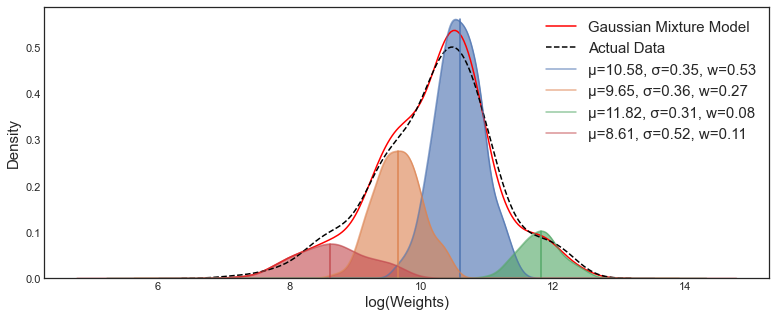

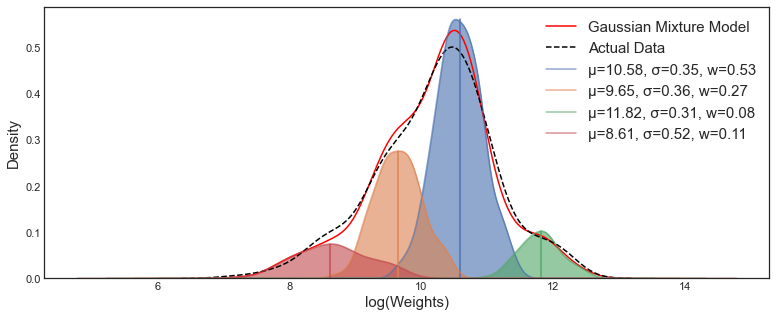

- A Gaussian Mixture Model (GMM) is a composite distribution made up of \(K\) Gaussian sub-distributions (components), each with its own probability density function \(N(\mathbf{\mu}_{i}, \mathbf{\Sigma}_{i})\), with mean \(\mathbf{\mu}_{i}\) and covariance \(\mathbf{\Sigma}_{i}\). Each component \(C_{i}\) has a mixture weight \(\phi_{i} \ge 0\), subject to the constraint \(\sum_{i=1}^{K} \phi_{i} = 1\). The probability density function of a GMM can therefore be expressed as:

\[p(x) = \sum_{i=1} ^{K} \phi_{i} N(x \mid \mathbf{\mu}_ {i}, \mathbf{\Sigma}_ {i})\]
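As a rough illustration of the formula above, the following sketch evaluates the density of a univariate two-component mixture using only the standard library. The helper names (`normal_pdf`, `gmm_pdf`) and the parameter values are hypothetical and chosen purely for illustration; the Riemann sum at the end is a quick sanity check that the weighted sum still integrates to about 1.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of a univariate Gaussian with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def gmm_pdf(x, weights, means, sigmas):
    """Mixture density p(x) = sum_i phi_i * N(x | mu_i, sigma_i^2)."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("mixture weights must sum to 1")
    return sum(w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, sigmas))

# Hypothetical two-component mixture (values chosen only for illustration).
weights = [0.3, 0.7]
means = [-2.0, 3.0]
sigmas = [1.0, 1.5]

# Riemann-sum check over [-15, 15): the mixture density should integrate to roughly 1.
step = 0.01
area = sum(gmm_pdf(-15.0 + k * step, weights, means, sigmas) * step for k in range(3000))
print(round(area, 4))
```

Because every component integrates to 1 and the weights sum to 1, the mixture does too, which is what the numerical check approximates.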

Long Answer

Gaussian Mixture Model

- A Gaussian mixture model is parameterized by two types of values: the mixture component weights \(\phi_{i}\), and the component distribution parameters, namely the means \(\mathbf{\mu}_ {i}\) and the variances/covariances \(\mathbf{\Sigma}_ {i}\).

- The mixture component weights \(\phi_{i}\), one for each component \(C_{i}\), satisfy \(\sum_{i=1} ^{K} \phi_{i} = 1\), so that the total probability distribution normalizes to 1.

- If the component weights aren't learned, they can be viewed as an a priori distribution over components, such that \(p(x \textrm{ generated by component } C_{i}) = \phi_{i}\). If they are instead learned, they are the a posteriori estimates of the component probabilities given the data.

- Multivariate Gaussian Mixture Model

\[p(\mathbf{x})= \sum_{i=1}^{K} \phi_{i} N(\mathbf{x} \mid \mathbf{\mu}_{i}, \mathbf{\Sigma}_{i}) \]

\[N(\mathbf{x} \mid \mathbf{\mu}_{i}, \mathbf{\Sigma}_{i}) = \frac{1}{\sqrt{(2\pi)^{d} | \mathbf{\Sigma}_{i}| }} \exp \left( -\frac{1}{2}(\mathbf{x} - \mathbf{\mu}_{i})^{T} \mathbf{\Sigma}_{i}^{-1} (\mathbf{x} - \mathbf{\mu}_{i})\right)\]

Here \(d\) is the dimension of \(\mathbf{x}\), which is distinct from the number of components \(K\).

\[\sum_ {i=1} ^{K} \phi _{i} = 1\]
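To make the multivariate formulas more concrete, here is a minimal NumPy sketch that evaluates the component density, the mixture density, and the posterior probability of each component for a given point (Bayes' rule applied to the weights and component densities). The function names and the example parameters are assumptions made for illustration, and the power of \(2\pi\) in the normalizer is taken to be the dimension of \(\mathbf{x}\), called `d` in the code.

```python
import numpy as np

def gaussian_pdf(x, mu, cov):
    """Multivariate normal density N(x | mu, cov) for a single point x."""
    d = mu.shape[0]                            # dimension of x, not the number of components
    diff = x - mu
    maha = diff @ np.linalg.solve(cov, diff)   # (x - mu)^T cov^{-1} (x - mu)
    norm = np.sqrt((2.0 * np.pi) ** d * np.linalg.det(cov))
    return np.exp(-0.5 * maha) / norm

def gmm_pdf(x, weights, means, covs):
    """Mixture density p(x) = sum_i phi_i * N(x | mu_i, Sigma_i)."""
    return sum(w * gaussian_pdf(x, m, c) for w, m, c in zip(weights, means, covs))

def responsibilities(x, weights, means, covs):
    """Posterior p(component i | x) = phi_i * N(x | mu_i, Sigma_i) / p(x)."""
    joint = np.array([w * gaussian_pdf(x, m, c) for w, m, c in zip(weights, means, covs)])
    return joint / joint.sum()

# Hypothetical 2-D mixture with K = 2 components (illustrative values only).
weights = np.array([0.4, 0.6])
means = [np.array([0.0, 0.0]), np.array([4.0, 4.0])]
covs = [np.eye(2), np.array([[2.0, 0.5], [0.5, 1.0]])]

x = np.array([0.5, -0.2])
print(gmm_pdf(x, weights, means, covs))
print(responsibilities(x, weights, means, covs))
```

Using `np.linalg.solve` rather than an explicit matrix inverse is a common choice for numerical stability; for high-dimensional data a log-density formulation would usually be preferable.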

Learning the Model

- If the number of components \(K\) is known, expectation maximization (EM) is the technique most commonly used to estimate the mixture model's parameters. You can find the details about EM at the link below.

https://dsworld.org/what-is-the-expectation-maximization-em-algorithm/
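For orientation, the sketch below shows one way EM could look for a univariate GMM: the E-step computes each component's posterior probability for every point, and the M-step re-estimates the weights, means, and variances from those probabilities. It is a simplified illustration rather than a production implementation; the function name `em_gmm_1d`, the quantile-based initialization, the fixed iteration count, and the synthetic data are all assumptions introduced here.

```python
import numpy as np

def normal_pdf(x, mu, var):
    """Univariate Gaussian density with mean mu and variance var (broadcasts over arrays)."""
    return np.exp(-0.5 * (x - mu) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

def em_gmm_1d(x, k, n_iter=100):
    """Fit a k-component univariate GMM to the 1-D array x with plain EM."""
    # Deterministic initialization: spread the means over the data quantiles.
    mu = np.quantile(x, (np.arange(k) + 0.5) / k)
    var = np.full(k, x.var())
    phi = np.full(k, 1.0 / k)
    for _ in range(n_iter):
        # E-step: r[n, i] = p(component i | x_n).
        joint = phi * normal_pdf(x[:, None], mu, var)   # shape (N, k)
        r = joint / joint.sum(axis=1, keepdims=True)
        # M-step: re-estimate weights, means, and variances from r.
        nk = r.sum(axis=0)
        phi = nk / x.size
        mu = (r * x[:, None]).sum(axis=0) / nk
        var = (r * (x[:, None] - mu) ** 2).sum(axis=0) / nk
    return phi, mu, var

# Synthetic data from two well-separated Gaussians (illustrative only).
rng = np.random.default_rng(0)
x = np.concatenate([rng.normal(-3.0, 1.0, 300), rng.normal(4.0, 0.5, 700)])

phi, mu, var = em_gmm_1d(x, k=2)
print(phi, mu, var)
```

A real implementation would typically monitor the log-likelihood for convergence, work in log space, and guard against collapsing variances; those details are omitted to keep the two steps visible.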

- Python code for GMM:

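import numpy as np

from sklearn.mixture import GaussianMixture

X = np.array([[1, 2], [1, 4], [1, 0], [10, 2], [10, 4], [10, 0]])  # toy training data (illustrative)

gm = GaussianMixture(n_components=2, random_state=0).fit(X)  # fit() requires the training data

X_test = np.array([[0, 0], [12, 3]])  # toy test points (illustrative)

gm.predict(X_test)  # component label assigned to each test point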
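Once a model is fitted, the learned parameters can be read back and matched to the symbols used earlier. The sketch below assumes scikit-learn is installed and uses synthetic data made up for illustration; `weights_`, `means_`, and `covariances_` correspond to \(\phi_{i}\), \(\mathbf{\mu}_{i}\), and \(\mathbf{\Sigma}_{i}\) respectively.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Synthetic 2-D data drawn from two separated clusters (illustrative only).
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=1.0, size=(200, 2)),
    rng.normal(loc=[6.0, 6.0], scale=1.0, size=(200, 2)),
])

gm = GaussianMixture(n_components=2, random_state=0).fit(X)

print(gm.weights_)       # estimated mixture weights phi_i, shape (2,)
print(gm.means_)         # estimated means mu_i, shape (2, 2)
print(gm.covariances_)   # estimated covariances Sigma_i, shape (2, 2, 2)

X_new = np.array([[0.5, -0.5], [5.5, 6.5]])
labels = gm.predict(X_new)        # hard assignment: most probable component per point
proba = gm.predict_proba(X_new)   # soft assignment: posterior probability per component
log_px = gm.score_samples(X_new)  # log of the mixture density p(x) per point
print(labels, proba, log_px)
```

`predict` returns only the most likely component, while `predict_proba` keeps the full posterior, which is often more informative for points that lie between clusters.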